You don't need intelligence to draw images any more than you need it to generate text. (Reddit proves that every day.) But yeah, Increasingly Nervous Man is saying exactly that, because intelligence requires a cognitive model of the world, or at least the given task.

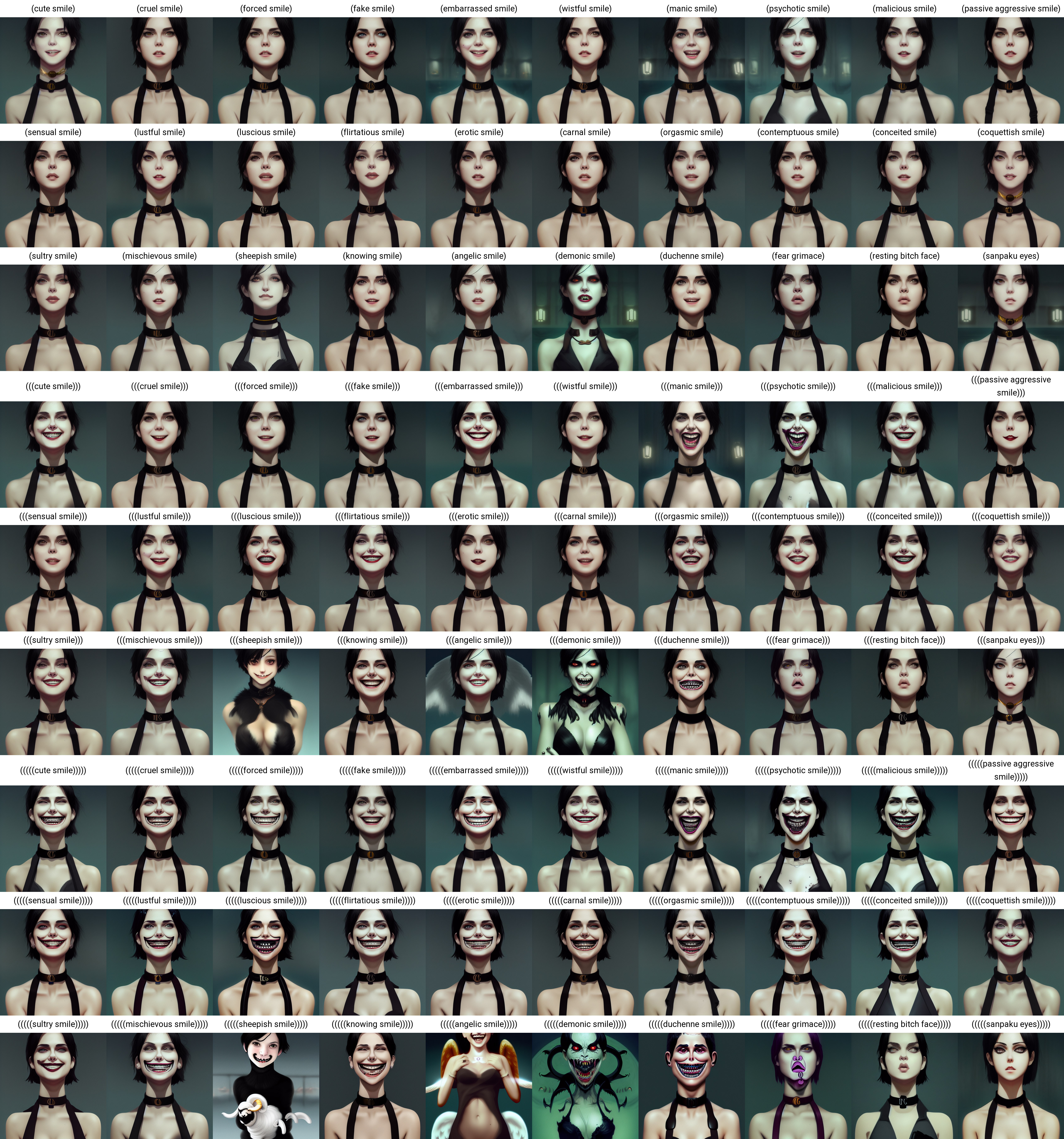

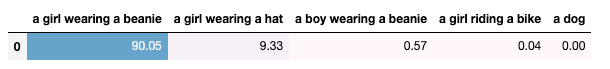

You can give all sorts of examples of the AI failing to generate something ridiculous - like, say, a dog wearing VR goggles - but it doesn't change the fact that it already can and does generate usable or near-usable images and has been doing so for months.

You can say "AI won't work" until you're blue in the face, but the fact remains that it is working. Just like GPT is already being used by writers, social media companies and gamers, and just like game devs are already beginning to use AI voice actors.

Funny, but the Roomba not having intelligence hasn't stopped people from buying it. Almost like there are other ways to work around problems besides truly intelligent AIs. A household robot that does the laundry but never puts the dog in the dryer is a great thing to aspire to, but it does require intelligence.

The Roomba has no "mental model" or understanding of anything in your house. It just knows the boundaries it's supposed to stay within and stops when it hits an object. No dogs vacuumed up yet.

These AIs can similarly be wrangled into doing what you want them to, whether with sketches, training or clever prompts. All without anything capable of passing a Turing Test.

This thread really isn't the best place for this discussion though.
