Sitra Achara

Arcane

- Joined

- Sep 1, 2003

- Messages

- 1,860

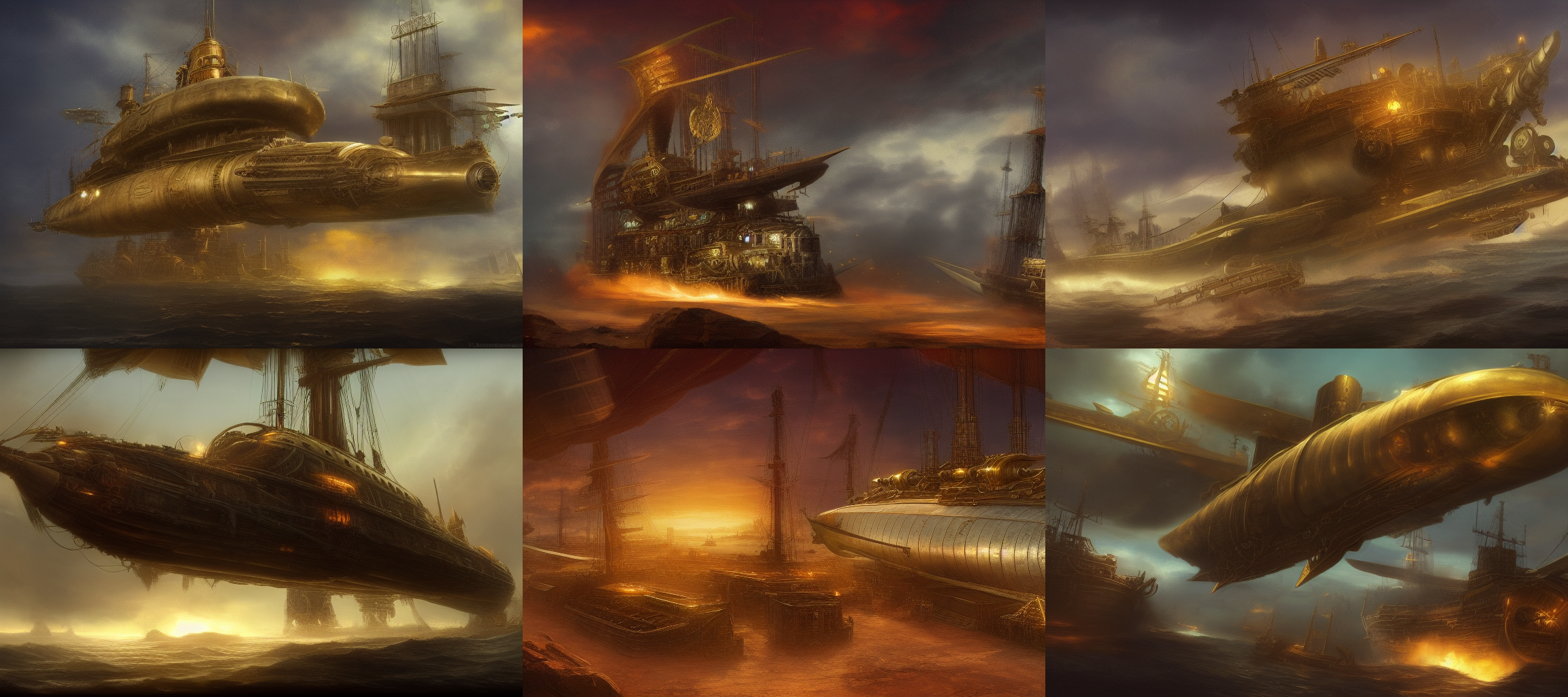

Afaik it was trained on this:

https://laion.ai/blog/laion-5b/

So you could download it, but it's 240 TB, so good luck with that...