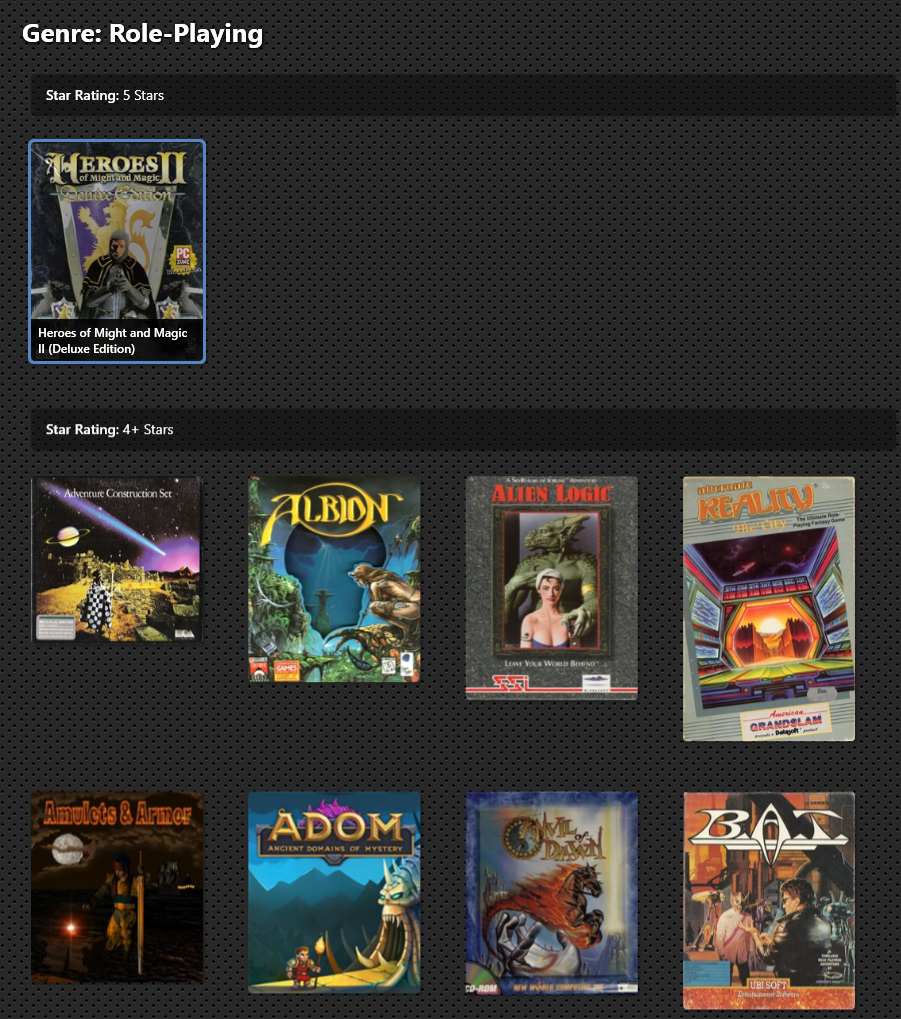

Sure, and you can do so with the configs below. Make sure to use the latest dev build.

> But that is the thing, this is exactly what I would like to see without shaders to have a basis for comparing their effects.

You wanna compare the "sharp" shader with vertical integer scaling enabled to the CRT-shaded output. This way the "sharp" shader always scales by an integer factor vertically, and applies only *very very minimal* interpolation horizontally (an "interpolation band" at most 1px wide at the edges of the pixels; this is *not* bilinear interpolation).
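For intuition, here's a rough CPU-side model of that kind of "sharp" filtering on a single row of pixels. It's a generic sharp-bilinear sketch, assuming upscaling only (`scale >= 1`); the helper name `sharp_bilinear_row` is hypothetical, and this is not the actual DOSBox Staging shader code.

```python
import math


def sharp_bilinear_row(row, scale, out_width):
    """Upscale one row of pixel values by `scale` (>= 1.0).

    Each output pixel copies its source pixel, except within half an output
    pixel of a source-pixel edge, where it is linearly blended with the
    neighbour. The blended band is therefore at most 1 output pixel wide.
    """
    if scale < 1.0:
        raise ValueError("this sketch only handles upscaling (scale >= 1)")
    # Half-width, in source pixels, of the flat (unblended) region around each texel centre.
    region_range = 0.5 - 0.5 / scale
    last = len(row) - 1
    out = []
    for j in range(out_width):
        u = (j + 0.5) / scale              # output pixel centre in source coordinates
        base = math.floor(u)
        center_dist = (u - base) - 0.5     # signed distance from the texel centre
        # Zero inside the flat region; outside it, ramp with slope `scale`.
        excess = center_dist - max(-region_range, min(region_range, center_dist))
        coord = base + excess * scale + 0.5
        # Ordinary linear interpolation at `coord` (texel centres at i + 0.5).
        x = coord - 0.5
        i0 = math.floor(x)
        w = x - i0
        a = row[min(max(i0, 0), last)]
        b = row[min(max(i0 + 1, 0), last)]
        out.append(a * (1.0 - w) + b * w)
    return out


# Two source pixels (black, white) stretched 2.5x to five output pixels.
print(sharp_bilinear_row([0.0, 1.0], 2.5, 5))
```

With a 2.5x scale and the two-pixel row `[0.0, 1.0]`, only one of the five output pixels ends up blended (at 0.5); at an integer scale such as 5x, none are.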

Auto CRT shader:

```ini
[render]
glshader = crt-auto
integer_scaling = vertical
```

Sharp shader:

```ini
[render]
glshader = sharp
integer_scaling = vertical
```
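To see why the horizontal factor usually isn't a whole number with `integer_scaling = vertical`, here's the arithmetic as a small Python sketch. It assumes a 4:3 display aspect ratio and that the largest vertical factor that fits is used; the function name is hypothetical, and DOSBox Staging's exact rounding may differ.

```python
from fractions import Fraction


def vertical_integer_fit(src_w, src_h, screen_w, screen_h, aspect=Fraction(4, 3)):
    """Largest whole-number vertical factor whose aspect-correct width still fits.

    Returns (v_factor, out_h, out_w, h_factor); out_w and h_factor are exact
    Fractions and are usually not whole numbers.
    """
    v_factor = screen_h // src_h
    while v_factor > 0:
        out_h = src_h * v_factor
        out_w = out_h * aspect               # width implied by the 4:3 picture
        if out_w <= screen_w:
            return v_factor, out_h, out_w, out_w / src_w
        v_factor -= 1                        # too wide: try a smaller factor
    raise ValueError("the source does not fit on this screen")


v, h, w, hf = vertical_integer_fit(320, 200, 1920, 1080)
print(v, h, float(w), float(hf))
```

For 320x200 on a 1920x1080 screen this gives 5x vertically (1000 lines) and a horizontal factor of 25/6, about 4.17x, which is where that thin interpolation band comes in.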

Try it and you'll see. I've spent many hours doing such comparisons while tweaking the shaders to roughly match the brightness of the "raw" output.

If you did this with the so-called "pixel perfect scaler", the results wouldn't match up at all resolutions, except perhaps on a 4K+ monitor. E.g., the smallest aspect-correct integer upscale of 320x200 is 1600x1200 (5x horizontally, 6x vertically); that's a no-go on 1080p. I recommend reading the linked ticket very carefully and making sure you understand all the points if this is unclear.
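The 1600x1200 figure falls out of the aspect-ratio arithmetic: the horizontal and vertical factors must satisfy (320 * sx) / (200 * sy) = 4/3, i.e. sx/sy = 5/6. A quick Python sketch, assuming a 4:3 target aspect ratio (the helper names are hypothetical):

```python
from fractions import Fraction


def smallest_integer_factors(src_w, src_h, aspect=Fraction(4, 3)):
    """Smallest whole-number (sx, sy) with (src_w*sx) / (src_h*sy) == aspect."""
    ratio = aspect * src_h / src_w       # required sx/sy, reduced to lowest terms
    return ratio.numerator, ratio.denominator


def pixel_perfect_sizes(src_w, src_h, screen_w, screen_h, aspect=Fraction(4, 3)):
    """All aspect-correct, integer-scaled output sizes that fit on the screen."""
    sx, sy = smallest_integer_factors(src_w, src_h, aspect)
    sizes = []
    k = 1
    while src_w * sx * k <= screen_w and src_h * sy * k <= screen_h:
        sizes.append((src_w * sx * k, src_h * sy * k))
        k += 1
    return sizes


print(smallest_integer_factors(320, 200))          # (5, 6): 5x wide, 6x tall
print(pixel_perfect_sizes(320, 200, 1920, 1080))   # nothing fits on 1080p
print(pixel_perfect_sizes(320, 200, 3840, 2160))   # only 1600x1200 on 4K
```

By contrast, a square-pixel mode like 640x480 needs no uneven factors and fits at 1x and 2x on a 1080p screen.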

EDIT: Maybe you're slightly confused by the word "shader". On a modern GPU, whenever you display any image, it *must* go through shaders (I'm simplifying, but that's the gist of it). So if you want to, say, "display raw pixels at 2x integer scaling", yeah, that will also be a very simple shader! Shaders in general != CRT shaders. Technically, `glshader = none` *still* uses an extremely simple shader, one of the simplest there is, which just applies the standard (and bad) bilinear interpolation we all know.
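For comparison, this is roughly what that standard bilinear interpolation does, modelled on the CPU in Python. It's a sketch assuming GL_LINEAR-style filtering with pixel centres at i + 0.5 and clamp-to-edge addressing; the function names are hypothetical, and this is not DOSBox Staging's code.

```python
import math


def bilinear_sample(img, x, y):
    """Sample a 2D grid of floats at continuous source coordinates (x, y).

    Pixel centres sit at i + 0.5; out-of-range neighbours clamp to the edge.
    """
    h, w = len(img), len(img[0])
    fx, fy = x - 0.5, y - 0.5
    x0, y0 = math.floor(fx), math.floor(fy)
    wx, wy = fx - x0, fy - y0

    def px(i, j):
        # Clamp-to-edge lookup: i is the column (x), j is the row (y).
        return img[min(max(j, 0), h - 1)][min(max(i, 0), w - 1)]

    top = px(x0, y0) * (1 - wx) + px(x0 + 1, y0) * wx
    bottom = px(x0, y0 + 1) * (1 - wx) + px(x0 + 1, y0 + 1) * wx
    return top * (1 - wy) + bottom * wy


def bilinear_upscale(img, out_w, out_h):
    """Resize `img` to out_w x out_h by bilinear sampling at each output pixel centre."""
    h, w = len(img), len(img[0])
    sx, sy = out_w / w, out_h / h
    return [[bilinear_sample(img, (i + 0.5) / sx, (j + 0.5) / sy)
             for i in range(out_w)]
            for j in range(out_h)]


print(bilinear_upscale([[0.0, 1.0]], 5, 1)[0])
```

Upscaling the two-pixel row `[0.0, 1.0]` to five pixels gives roughly `[0.0, 0.1, 0.5, 0.9, 1.0]`: three blended pixels smeared across the edge, whereas the "sharp" shader limits the blend to a band at most 1px wide.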
