
Welcome to rpgcodex.net, a site dedicated to discussing computer based role-playing games in a free and open fashion. We're less strict than other forums, but please refer to the rules.

Why don't indie devs use AI-generated images as art?

- Thread starter Humanophage


Still trying to figure out what the "best" combination of settings is but I've gotten satisfactory results so far.

Stable Diffusion?

V17

Educated

- Joined

- Feb 24, 2022

- Messages

- 350

This has been my experience as well. If you give it a very simple prompt and let it kind of do what it wants, it can give you stunning results, reasonably real looking people etc. But as soon as you need to wrangle it into creating something more specific or complicated, with more different features on the image, it becomes a struggle. For example I tried creating a "photo" of a building with a large white billboard with text on it, so there was usually a part of some street with a few cars, streetlights and various other city things. It could not produce anything that didn't immediately look incredibly AI-like, with recognizable shapes that fall completely apart on a closer look.[...]

I'll give it another shot, but I think it is better to illustrate "regular fantasy/medieval scenes" than unusual ones.

As for game assets, I don't think we are there yet at all, even though some have used it to generate tilesets.

However ask it to create a portrait of a rustic old Englishman and you'll get it and it will be actually decent.

Made by Stable Diffusion

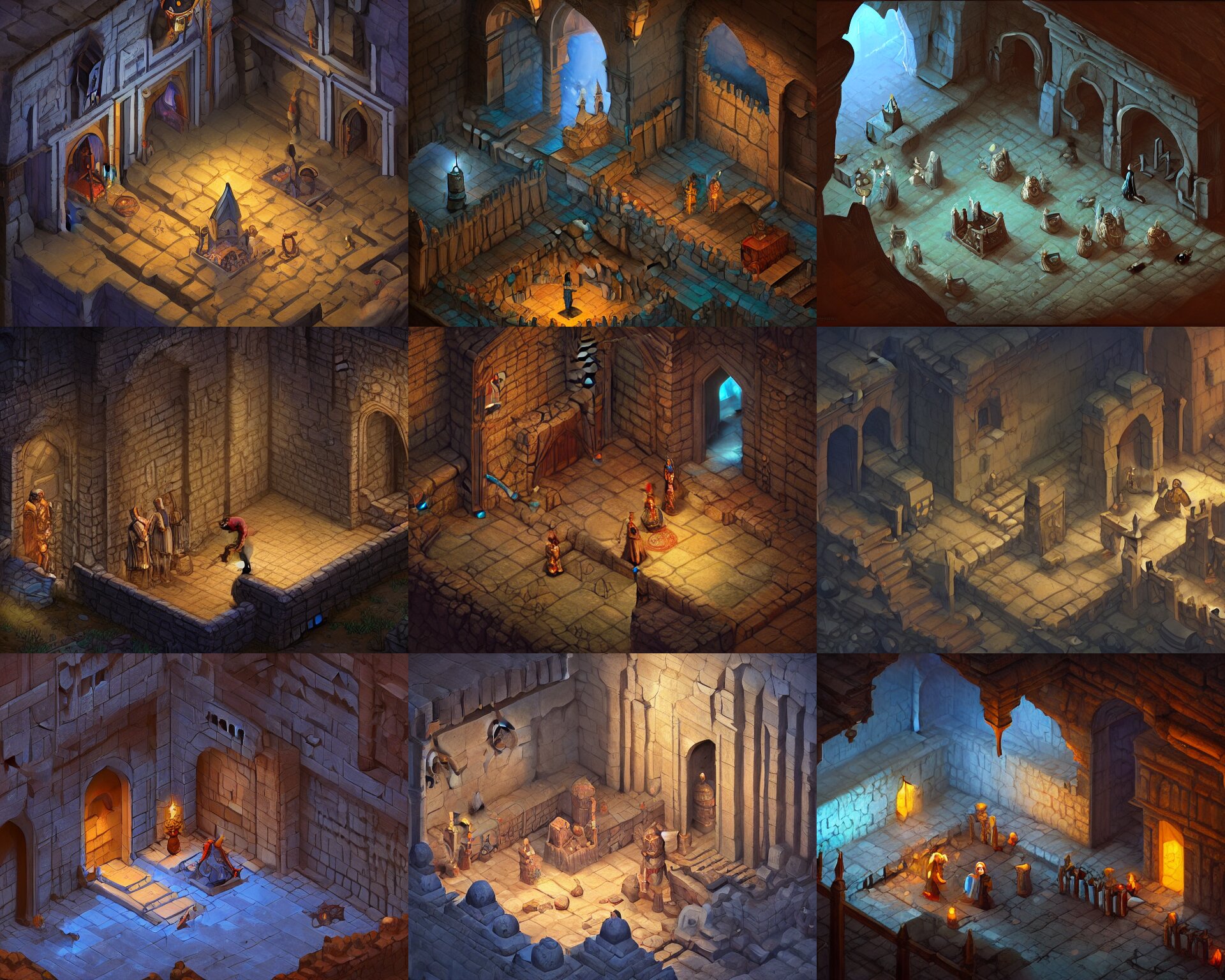

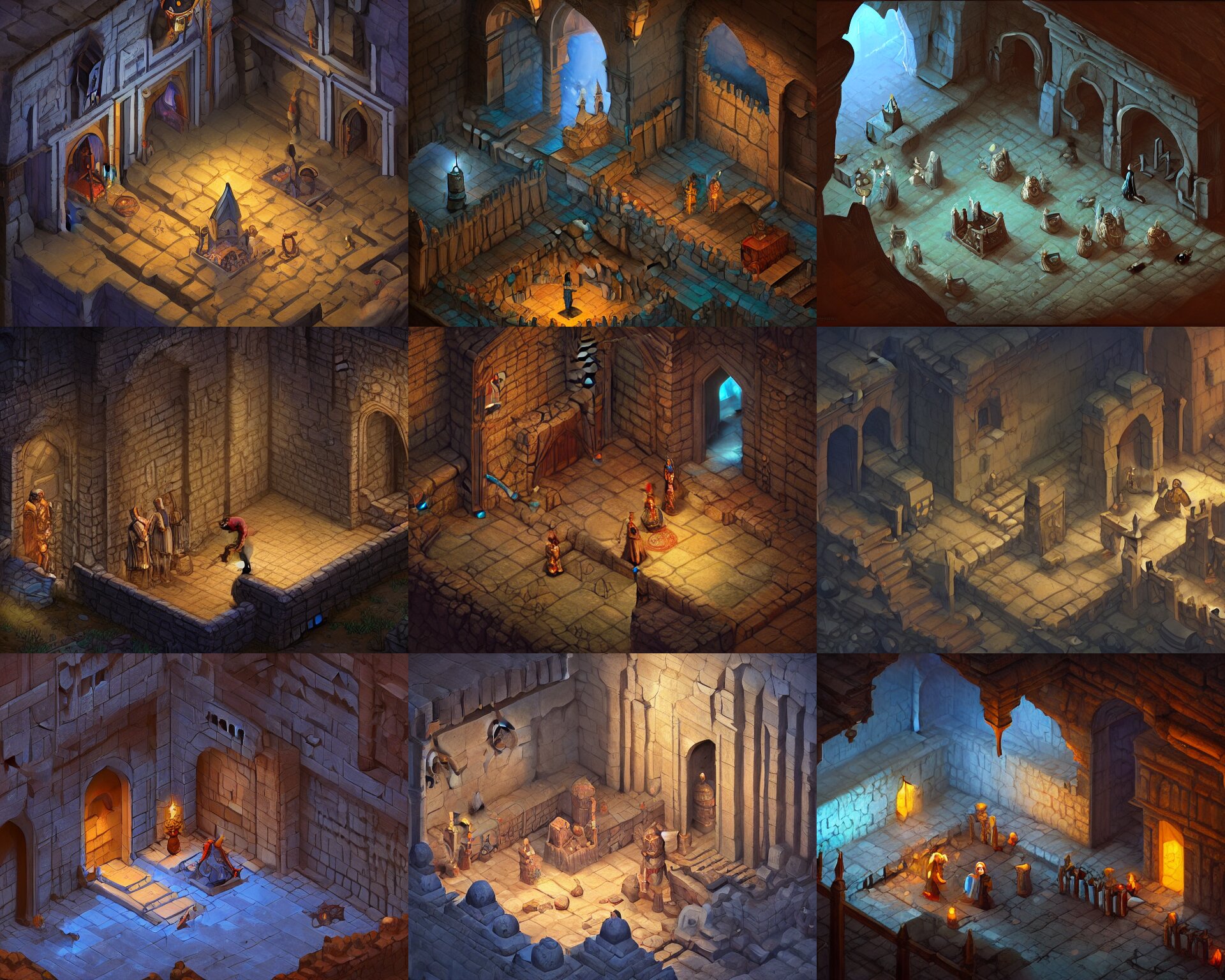

Prompt: A digital painting of an isometric medieval arabic fantasy dungeon room on a plain black background by justin gerard, paul bonner, 2 d game art, isometric, arabian nights, highly detailed, pale blue backlight, digital art, artstation hd

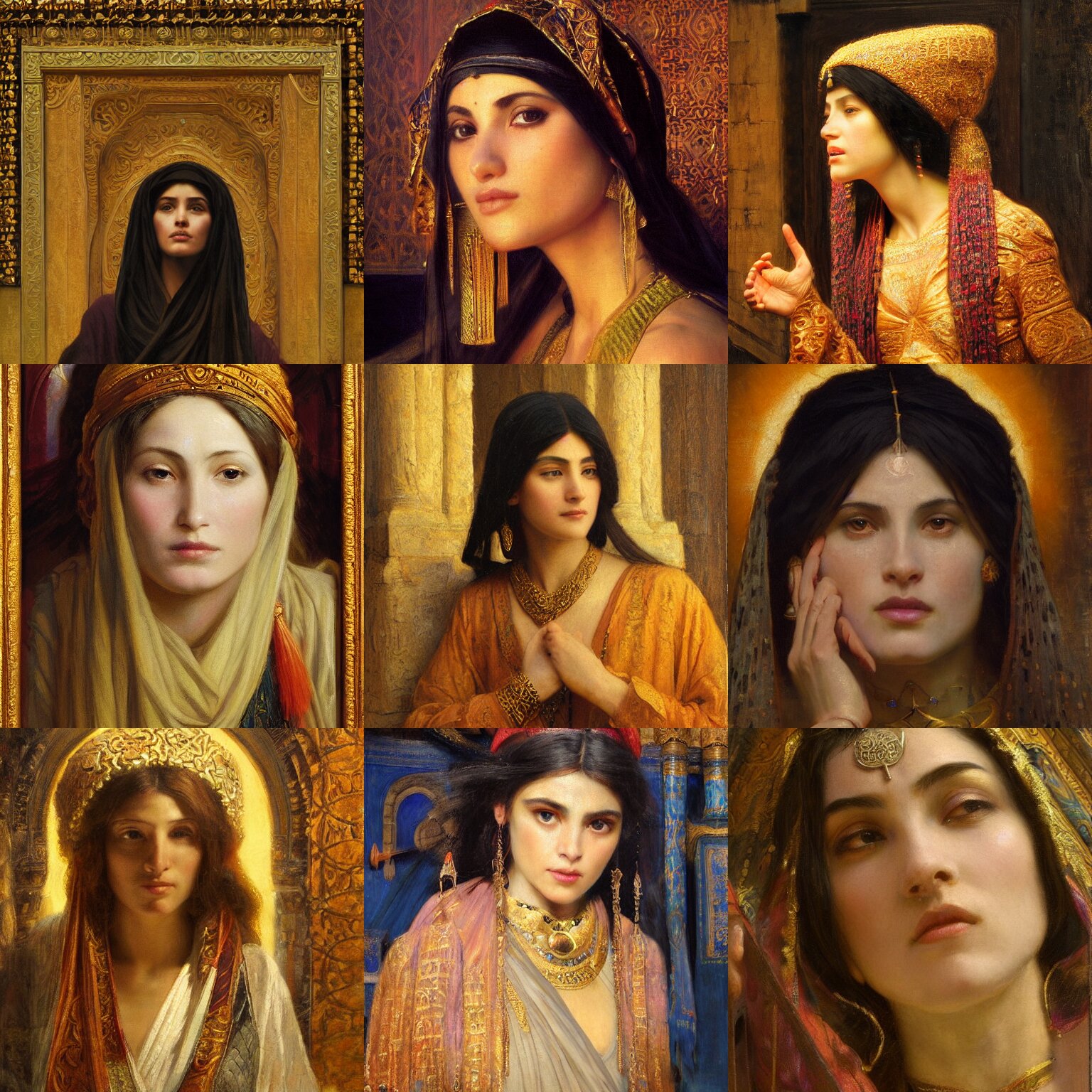

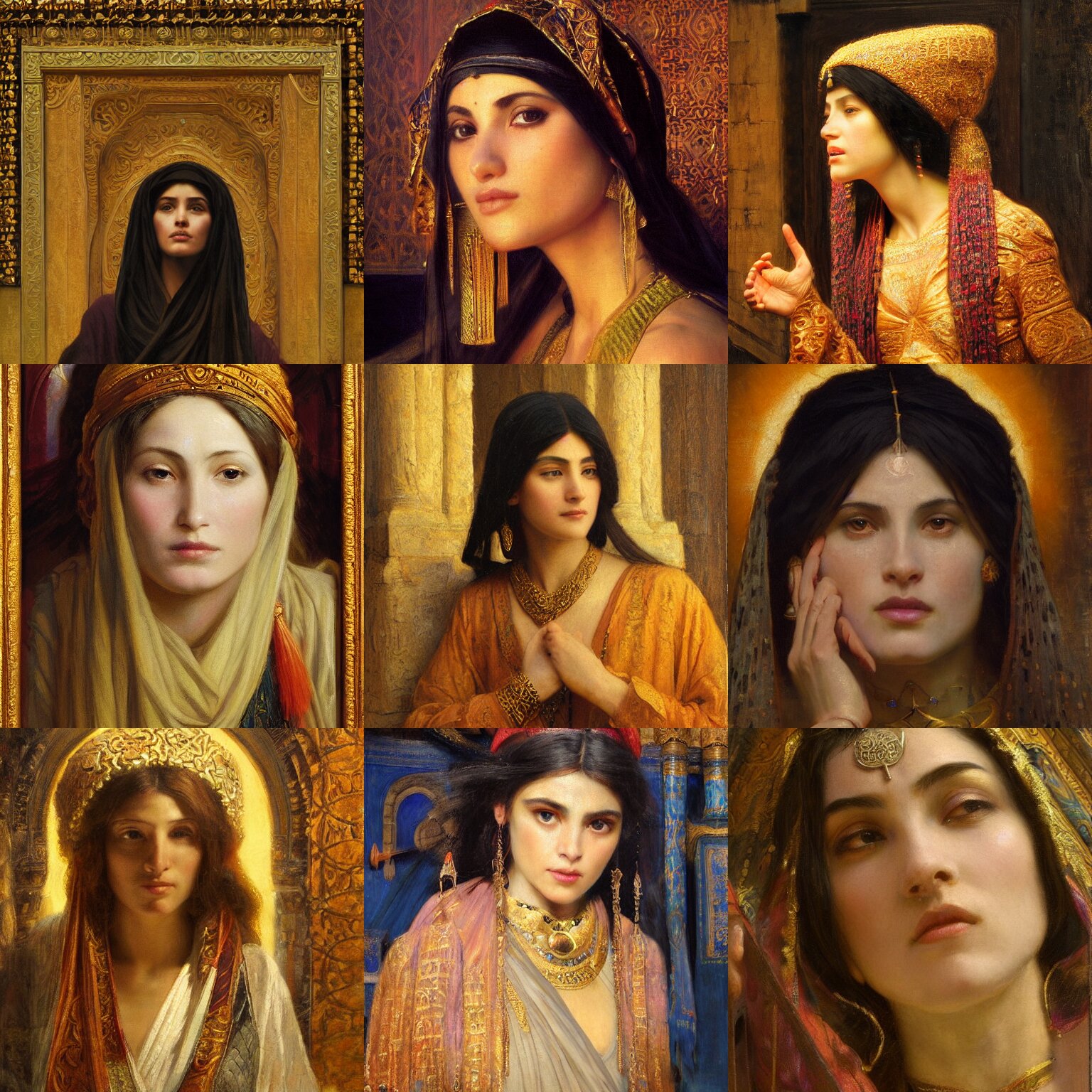

orientalism painting of a female wizard in a temple face detail by edwin longsden long and theodore ralli and nasreddine dinet and adam styka, masterful intricate art. oil on canvas, excellent lighting, high detail 8 k

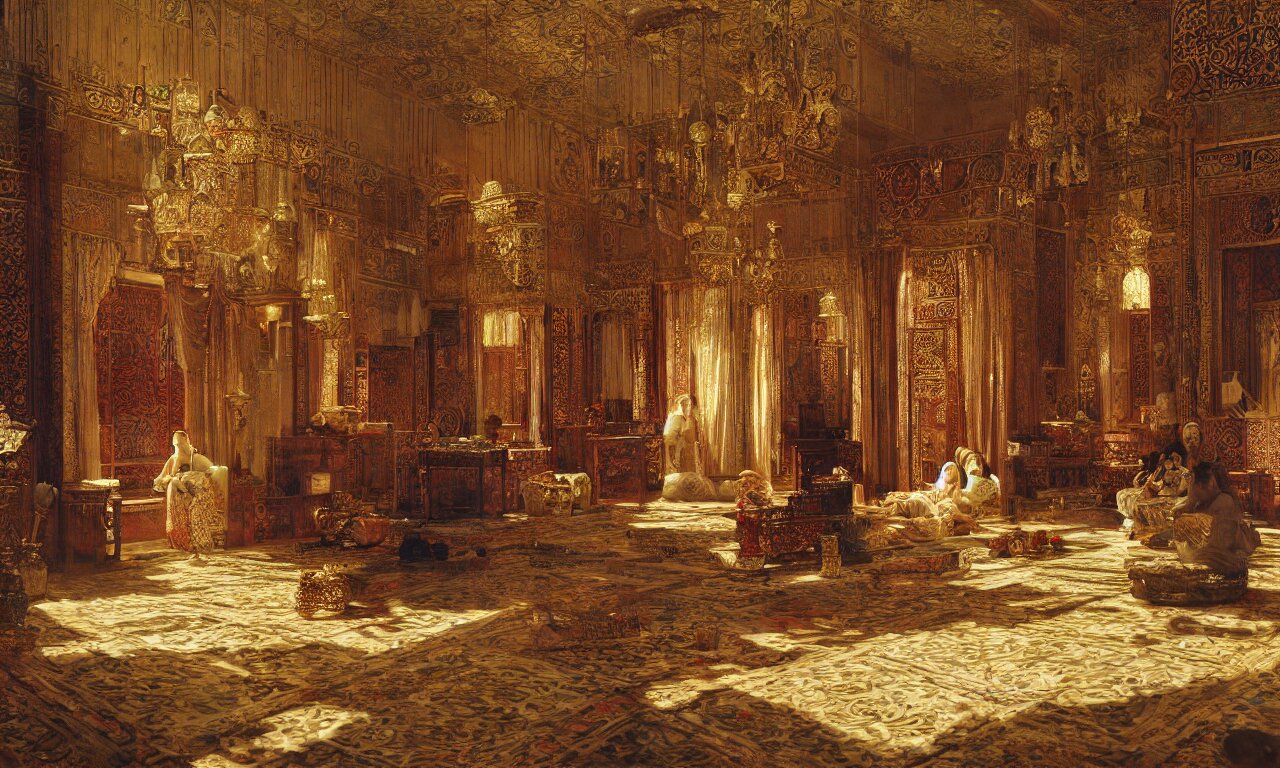

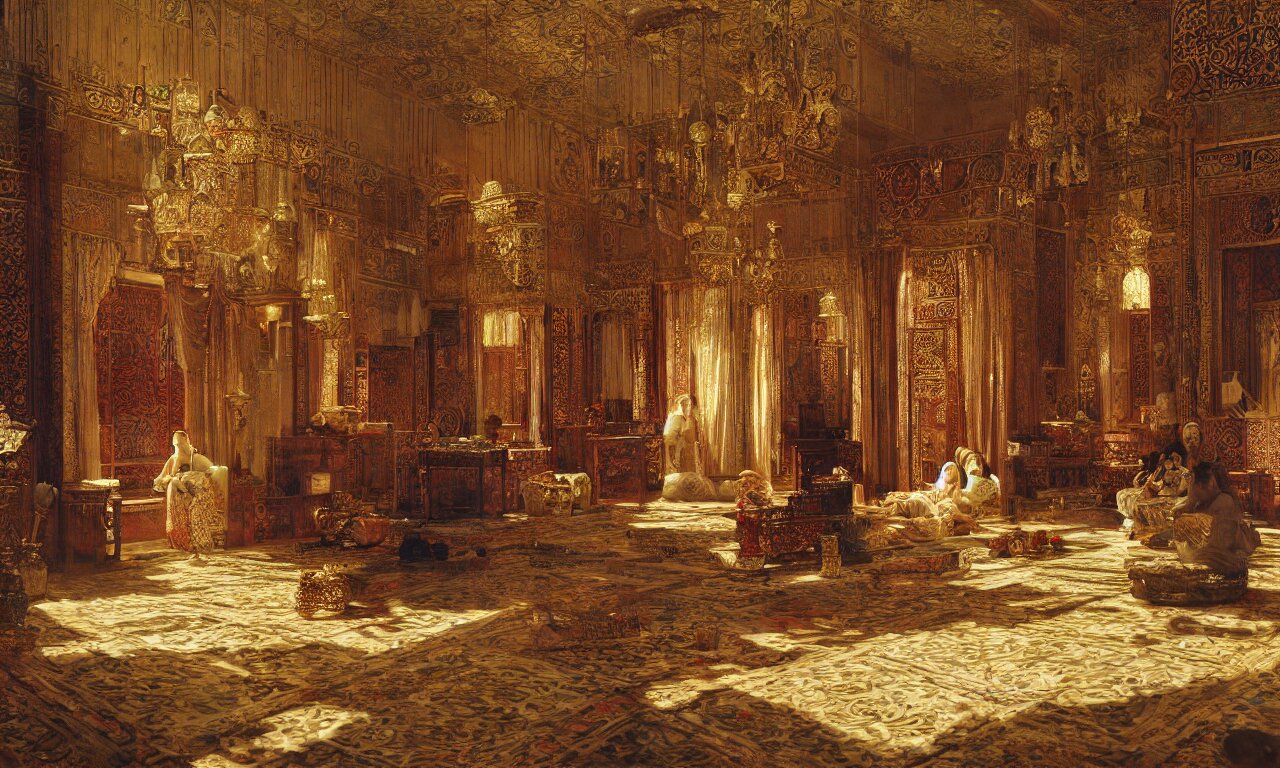

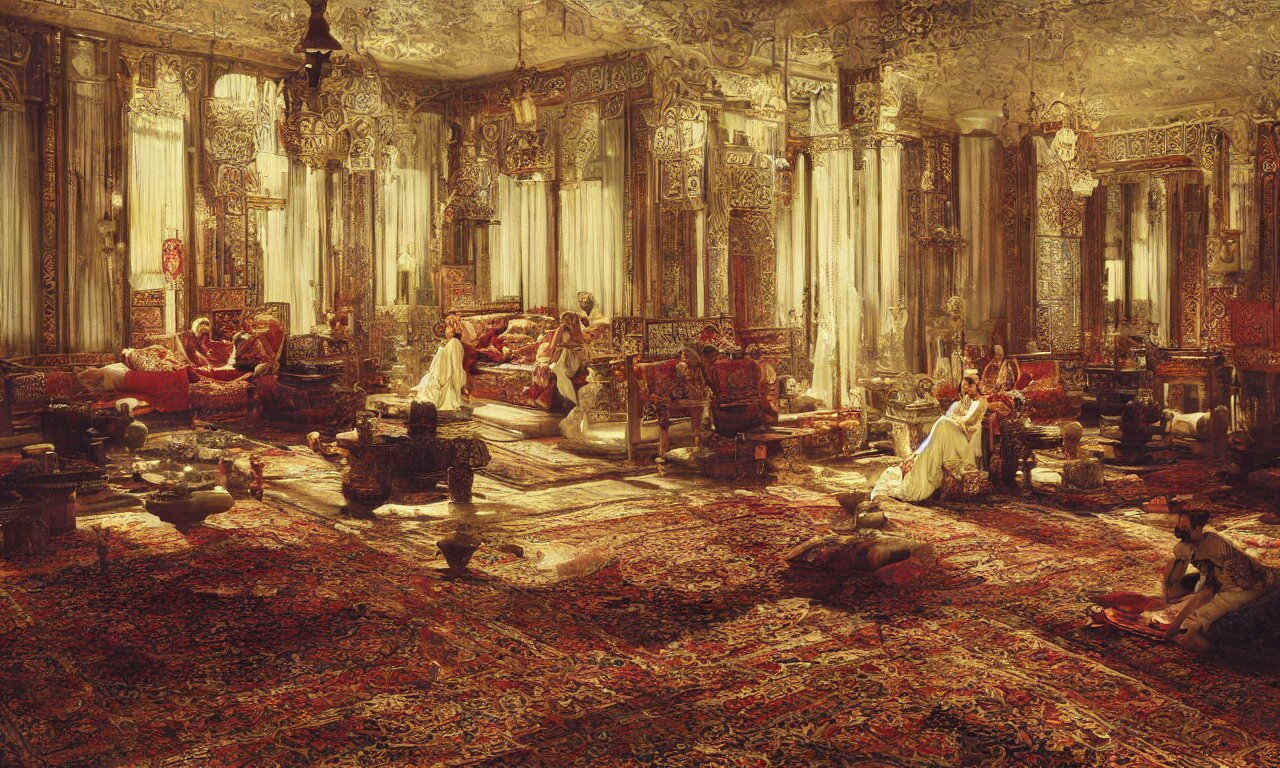

grand dream of ottoman opulence and the splendor of architectural orientalism, art by rudolf ernst, orientalism, hypereralism, ultra hd, 8 k resolution

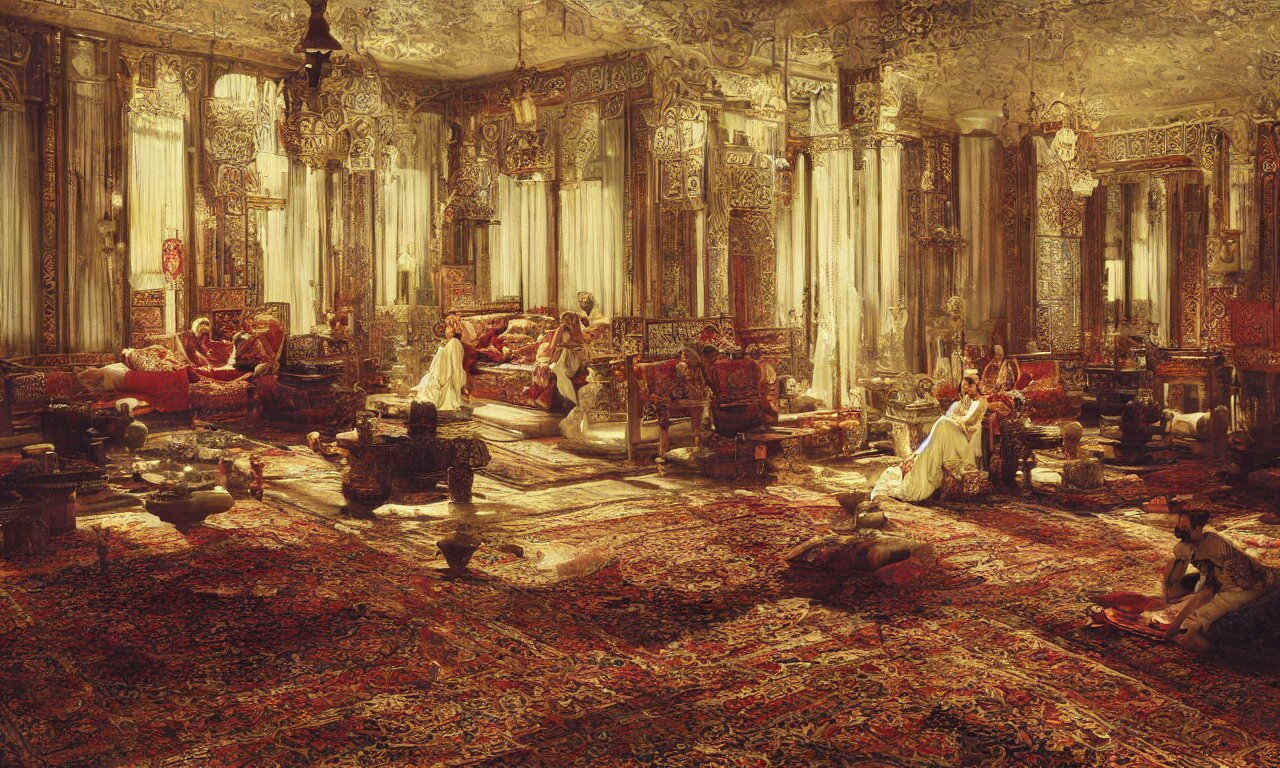

A grand oriental throne room by by feng zhu, unreal engine, god rays, ue5, concept art, wide angle, 4k hd wallpaper

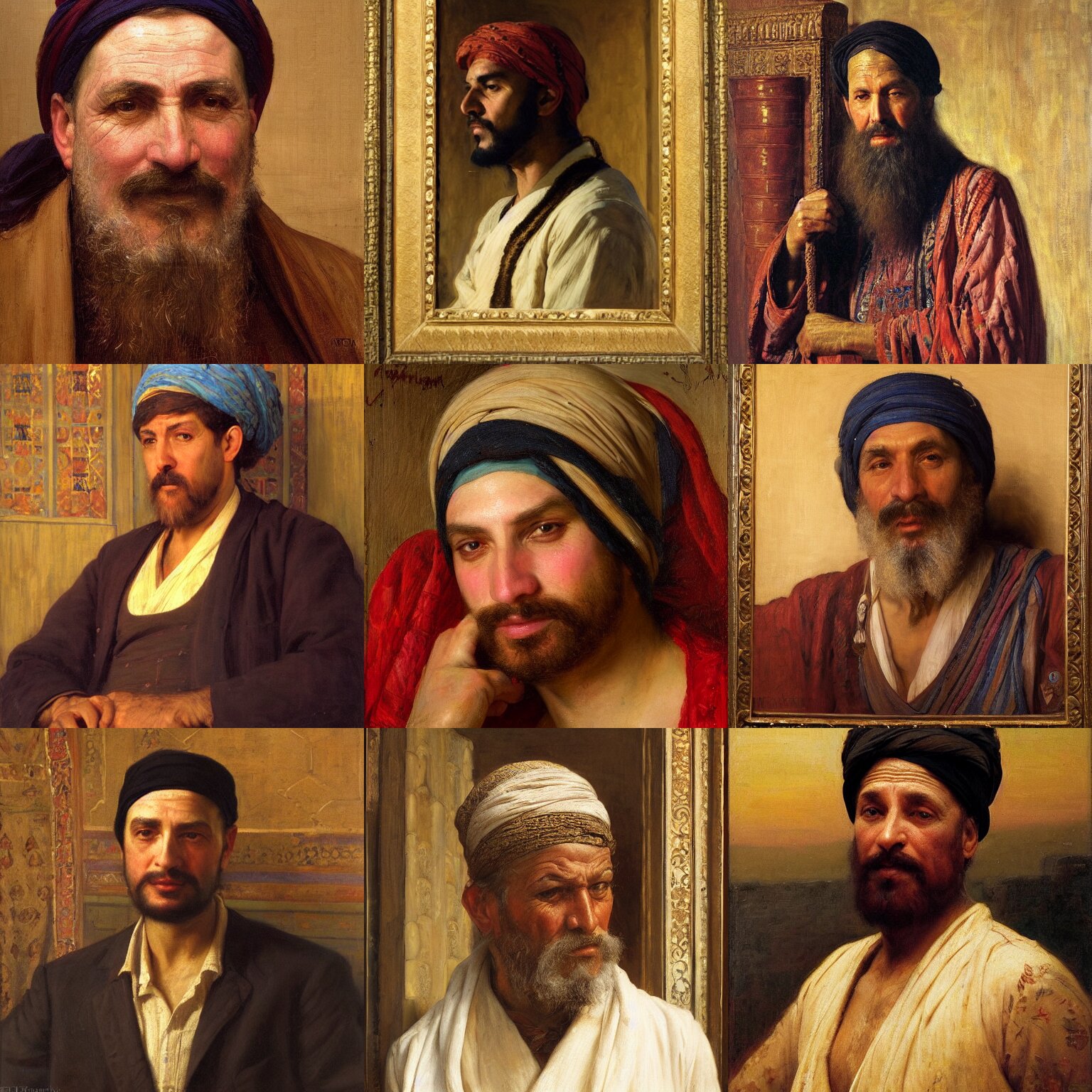

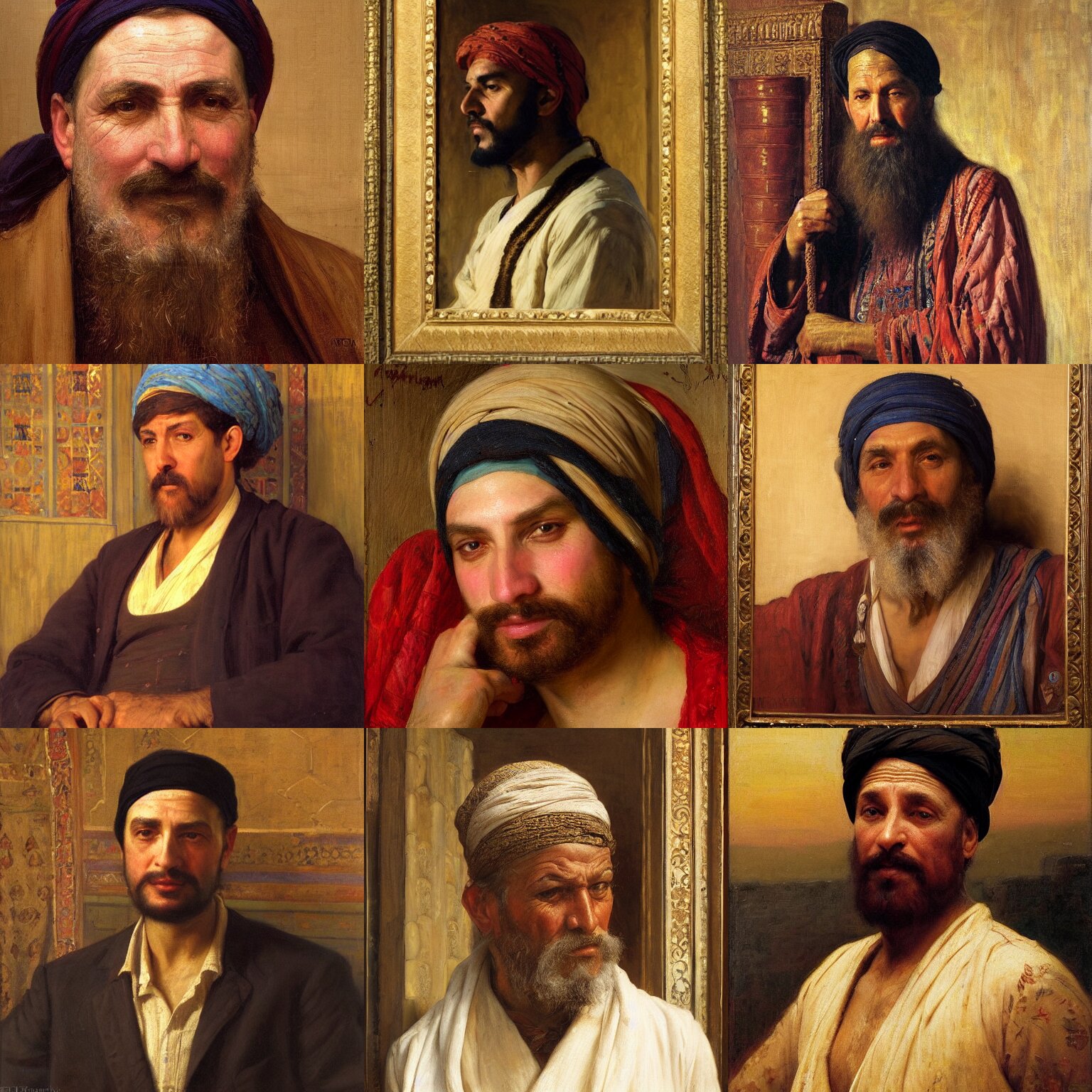

orientalism face portrait of a greedy merchant by Edwin Longsden Long and Theodore Ralli and Nasreddine Dinet and Adam Styka, masterful intricate art. Oil on canvas, excellent lighting, high detail 8k (can be used in rpg portraits it's just needs a little bit polishing)

orientalism face detail of a beautiful woman holding a candle in the dark by edwin longsden long and theodore ralli and nasreddine dinet and adam styka, masterful intricate art. oil on canvas, excellent lighting, high detail 8 k


None

Arbiter

- Joined

- Sep 5, 2019

- Messages

- 2,142

I decided to run a bit of an experiment to see how different the sampling methods really are. I know there is already a comparison out there, but I wanted to see more results. I'm not too concerned with speed, so I won't be mentioning that. The same prompt and seed were used across all methods, starting at 15 steps and increasing to 30, 45, and then 60.

I uploaded albums of the results for those interested:

PLMS

k_lms

k_heun

k_euler_a

k_euler

k_dpm_2_a

k_dpm_2

ddim

k_lms, k_dpm_2, PLMS: Give relatively consistent results across all step settings. Slightly more detail at higher step counts, with diminishing returns.

k_euler, ddim: Same as above but blurrier at lower steps, which can be useful if you want to introduce fog.

k_heun: Lower step counts give sharper details; more steps blend and blur things together.

k_dpm_2_a: Far more stylized; more steps can end up giving you a completely different image.

k_euler_a: As with k_dpm_2_a, additional steps result in a different scene. Lower steps are blurrier ("foggy"), with a tendency for artifacts.

I'm gravitating towards k_euler_a as it gives me the visual results I desire, despite the artifacts. k_dpm_2_a is good for a stylized result. I'll go through the others later to figure out how quick they end up being (or I bet someone else already has this info).

prompt: haunted overgrown mossy forest at night, alan lee, oil painting, gothic, high resolution, 4k

width & height: 512 x 512

CFGS: 3

seed: 420522535

# of images to generate: 4
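For anyone who wants to repeat this kind of comparison, the experiment boils down to a grid: prompt, seed and CFG held fixed, with every sampler run at every step count. Here is a minimal sketch of that grid in Python. `RunConfig` and `build_grid` are hypothetical names made up for illustration, not part of any Stable Diffusion tool, and the code only enumerates the runs; feeding each entry to an actual generator is left out.

```python
from dataclasses import dataclass
from itertools import product

# Sampler names and step counts as listed in the post above.
SAMPLERS = ["PLMS", "k_lms", "k_heun", "k_euler_a",
            "k_euler", "k_dpm_2_a", "k_dpm_2", "ddim"]
STEP_COUNTS = [15, 30, 45, 60]


@dataclass(frozen=True)
class RunConfig:
    """One cell of the comparison grid (hypothetical helper type)."""
    sampler: str
    steps: int
    prompt: str
    seed: int
    cfg_scale: float
    width: int
    height: int
    n_images: int


def build_grid(prompt, seed, cfg_scale=3, width=512, height=512, n_images=4):
    """Return one RunConfig per (sampler, step count) pair.

    Everything except sampler and steps is held constant, so any
    difference between outputs comes from those two settings.
    """
    return [
        RunConfig(sampler, steps, prompt, seed, cfg_scale, width, height, n_images)
        for sampler, steps in product(SAMPLERS, STEP_COUNTS)
    ]


grid = build_grid(
    "haunted overgrown mossy forest at night, alan lee, oil painting, "
    "gothic, high resolution, 4k",
    seed=420522535,
)
print(len(grid))  # 8 samplers x 4 step counts = 32 runs
```

Keeping the seed fixed is what makes the albums comparable: each sampler starts from the same noise.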


- Joined

- Nov 6, 2020

- Messages

- 17,656

This is basically the thought process behind population reductions via vaccines or whatever.

We have a growing mass of mostly incompetent humans who only ever were capable of doing things like moving boxes and flipping burgers and they're going to be out of anything to do in their life, forever.

I think it's wasteful and evil. It comes from a lack of understanding of human potential. Many of the people who advocate this also claim to be "masons" who will "enlighten" the world, but they have corrupted the world and lowered the IQ of billions of people just so they'd have access to cheap hamburger flippers. Only now that they think they have a better option do they want to stuff people in pods and feed them bugs until they can figure out a way to deal with their excess workers.

This pessimistic, selfish vision of the future is a dead end. It offers no advancement for humanity as anything other than disposable robot repairmen. If Gene Roddenberry had thought like this, the Federation in Star Trek would have been too busy sterilizing every human on Earth once they invented replicators to worry about exploring the stars.

The_Sloth_Sleeps

Arcane

- Joined

- Oct 26, 2016

- Messages

- 2,599

Same old yet again None

TBF a couple of the portraits look ok, but there have always been programs that generate faces. So not won over.

Some of the forest scenes look ok, where you are staring at leaves or noise. Could possibly be used here as loading screens/backdrops.

Everything else requires a lot of work to correct.

The isometric stuff maybe you could chop up for tiles but TBH a huge amount of work, not saving any time IMO.

Can you just generate a regular isometric tile? Say a wall piece or ground, stairs etc?


tritosine2k

Erudite

- Joined

- Dec 29, 2010

- Messages

- 1,847

and I thought Perkel was a tech illiterate

in comparison to generic mobile game art i think anything created with DALL-E (or even better, Stable Diffusion) is exceptionally good.. but again, it's just the start.. it's not like the first 3D models were amazing either :p This will be bigger than even 3D engines i believe because it's just so easy to use. Maybe we'll see new 2D engines with graphics we didn't think was possible using 2D, there's so much happening right now with it, it's unlikely to slow down any time soon. Every month is a big step forward.

For anything AI, this is a good channel to follow: https://www.youtube.com/c/KárolyZsolnai/videos

None

Arbiter

- Joined

- Sep 5, 2019

- Messages

- 2,142

Closest thing to tile generation I've seen is this. It isn't isometric, but serves as a PoC even if it is a little dated. Given enough time the software may mature to the point where you can actually get the results you desire with relative ease. For now I'll screw around with the image-to-image processor and see if I can force some isometric perspectives.

mkultra

Augur

- Joined

- Feb 27, 2012

- Messages

- 493

You can create anything with AI: you just use an init image and you can have hundreds or thousands of variations of that image, in any style you can imagine, with any additions you want. Let it batch process for 10-15 minutes, come back, go through the images and hit delete on the crappy ones. Out of 100 images you're more than likely to have 5-10 great ones.

I could use my own art or someone else's; it will change it beyond recognition, depending on init image strength. Guiding the AI (instead of using noise, which is the default) is really the way to use this in its current state, imo. Even something quick in MS Paint to show the AI what you want is better than noise and hoping to get lucky.

This is (in its current state) all about mass producing, mass deleting and luck. The only skillset is really taste, knowing what to keep and what to delete.

I've done plenty of commission work now and people have been happy. No one cares if you're not sold on AI lol. It's obviously in its infancy. Every month is another leap forward.


J1M

Arcane

- Joined

- May 14, 2008

- Messages

- 14,788

I think what's particular about this technology that has people scared and excited about it is how close the prompt interface is to actually working with a concept artist. It's just missing the ability to segment and iterate on details like "create a light source here and make her eyes green".

Ironically, the same thing has detractors who haven't worked with artists acting dismissive of it. I can only assume they think art directors communicate via mind melds or something.

mkultra

Augur

- Joined

- Feb 27, 2012

- Messages

- 493


It's not missing that at all

if you already have an image close to what you want, even better, just import it to photoshop cut and paste stuff (does not need to look good at all), guide the AI with that.


mkultra

Augur

- Joined

- Feb 27, 2012

- Messages

- 493

i know, i'm just saying it's even closer than you perhaps thought

That sounds great. My point was that the interface is different than working with a real artist for those details.

Dexter

Arcane

- Joined

- Mar 31, 2011

- Messages

- 15,657

Various helpful links for people interested:

IMG2PROMPT: you can upload an image you have and an algorithm tries to predict keywords you can feed Stable Diffusion to get similar results: https://replicate.com/methexis-inc/img2prompt

Links to where you can search the LAION-5B image database, a subset of which Stable Diffusion has been trained on. Either upload an image yourself and it will try to find similar pictures along with keyword pairs, or search via keyword, e.g. "bearded man" or "Scarlett Johansson" or whatever, and find pictures the AI was most likely fed during training along with keywords it will recognize: https://rom1504.github.io/clip-retrieval/ https://laion-aesthetic.datasette.io/laion-aesthetic-6pls/images

Searchable image browsing/prompt sharing sites where people upload their resulting images along with the prompts: https://lexica.art/ https://www.krea.ai/

Galleries of artists that Stable Diffusion will recognize and has been trained on, along with results you might expect when using them in prompts: https://sgreens.notion.site/sgreens/4ca6f4e229e24da6845b6d49e6b08ae7 https://f000.backblazeb2.com/file/clip-artists/index.html

You can also find a list of about 3000 artists along with their score/weight and category in some of the WebUI repos: https://github.com/AUTOMATIC1111/stable-diffusion-webui/blob/master/artists.csv

And you can search for artists by art movement on several sites like https://artsandculture.google.com/category/art-movement or https://www.wikiart.org/en/artists-by-art-movement but you don't have a guarantee that they were included in the dataset.


How can you iterate, though? For instance, if you want to add something, like a tree to an AI generated image, do you just erase the part where you want the tree, and hand draw the tree yourself, and ask the AI to iterate on that?

It's not missing that at all. That's how you guide the AI with the init image. Again, you can create anything, and you can direct the AI very, very easily. A crude MS Paint drawing of a human with green blobs where the eyes are will direct the AI to draw a human, and tell it that the human should have green eyes. For a light source, try drawing a sun with light from it falling onto the subject, or maybe draw white on the crude black human form, and make sure to direct it via text too.

if you already have an image close to what you want, even better, just import it to photoshop cut and paste stuff (does not need to look good at all), guide the AI with that.

Anyway, it is not that different from working with artists, as sketching things is also much faster than just using text to communicate.

V17

Educated

- Joined

- Feb 24, 2022

- Messages

- 350

How is it always cartoonists getting so irrationally angry? The pattern repeats, "oh, I made a small controversy, quick, let's use it for advertising". But so far it's never people who make actual art doing it, just mediocre cartoonists.

Bonus points for using the word "hellsite".

Yes, that is basically how it works. It's a bit better when instead of drawing it (unless you're good at drawing) you use something like photobashing and just paste an image of a tree there.

Normally with the text to image process the AI starts with an image of noise and then gradually transforms the noise to create the image that resembles the text prompt. But instead of noise you can use an actual image as the starting point and finetune how much the AI is supposed to change it, plus you can use masks to tell it what areas it can change and what to leave alone. You still have to use the same text prompt for the AI to understand what to do, so in this case you would for example add "with a tree on the right" to the prompt and one of the results would probably work.
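To make the description above a bit more concrete, here is a toy numeric sketch of the three knobs mentioned: starting from an image instead of noise, a strength setting for how much may change, and a mask for which areas may change. It is not Stable Diffusion's actual code. Images are plain lists of floats, the linear blend in `noised_start` is a simplification of how real samplers add noise, the `steps_to_run` rule is an assumed convention, and all function names are hypothetical.

```python
import random


def steps_to_run(total_steps, strength):
    """How many denoising steps get applied to the init image.

    Assumed convention: strength 0.0 leaves the image untouched,
    strength 1.0 runs the full schedule as if starting from noise.
    """
    return min(int(total_steps * strength), total_steps)


def noised_start(init_pixels, strength, seed):
    """Blend the init image with seeded Gaussian noise.

    Simplified linear blend for illustration only; the higher the
    strength, the less of the original image survives as guidance.
    """
    rng = random.Random(seed)
    return [(1.0 - strength) * p + strength * rng.gauss(0.0, 1.0)
            for p in init_pixels]


def apply_mask(original, generated, mask):
    """Keep original pixels where mask is 0, take generated ones where it is 1."""
    return [g if m else o for o, g, m in zip(original, generated, mask)]


init = [0.2, 0.4, 0.6, 0.8]

# Strength 0.0: nothing is added, so the start point is the init image itself.
unchanged = noised_start(init, 0.0, seed=1)

# Strength 0.75 on a 50-step schedule: 37 steps are spent reworking the image.
n_steps = steps_to_run(50, 0.75)

# Only the two middle "pixels" are allowed to change.
result = apply_mask([1, 2, 3, 4], [9, 9, 9, 9], [0, 1, 1, 0])
print(unchanged, n_steps, result)
```

The mask step is the "tell it what areas it can change" part: whatever the model produces outside the mask is simply thrown away in favour of the original.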


Dexter

Arcane

- Joined

- Mar 31, 2011

- Messages

- 15,657

img2img

If you don't care about an exact result and you found something passable that you want to add something to, but the prompt won't quite take, you can save the Seed and your prompt, modify your initial image and just do it again, you will get something reasonably close.
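The reason saving the seed works is that the seed fixes the starting noise, so a rerun with the same seed only differs where you changed the prompt or the initial image. A tiny sketch of that idea, using Python's standard `random` module as a stand-in for the real noise generator (`initial_noise` is a hypothetical helper, not a Stable Diffusion function):

```python
import random


def initial_noise(seed, n_values):
    """Return n_values Gaussian samples that depend only on the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n_values)]


first_run = initial_noise(420522535, 8)
rerun = initial_noise(420522535, 8)       # same seed: identical noise
other_seed = initial_noise(420522536, 8)  # different seed: different noise

print(first_run == rerun, first_run == other_seed)
```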

If you got something that's "perfect" but you want to change a specific thing about it, you will have to mask and paint over the specific area while using the Original image and same prompt on img2img:

Afaik there's already Plugins for Automating some of this and easier Masking, but again Early days: https://github.com/sddebz/stable-diffusion-krita-plugin

https://user-images.githubuserconte...339-9d146a9a-ba9f-4671-9bd8-c8b55fd48ba6.webm

Inpainting: https://github.com/parlance-zz/g-diffuser-bot/tree/g-diffuser-bot-diffuserslib-beta

https://imgur.com/a/pwN6LHB


Vermillion

Educated

- Joined

- Jul 15, 2022

- Messages

- 84


If AI causes these "artists" to collectively neck themselves then I'll have considered it well worth the time and money placed in its creation.

Dexter

Arcane

- Joined

- Mar 31, 2011

- Messages

- 15,657

Apparently the result of: "fallout 5 tarkov stalker 2, canon50 first person movie still, ray tracing, 4k octane render, hyperrealistic, extremely detailed, epic dramatic cinematic lighting;

width:768 height:448 steps:50 cfg_scale:10 sampler:k_euler_a"
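If you collect settings lines like the one above alongside prompts, they are easy to turn into a dictionary for reuse. A small sketch, assuming the line is always space-separated `key:value` pairs as in this post (`parse_settings` is a hypothetical helper, not part of any UI):

```python
def parse_settings(line):
    """Parse 'key:value key:value ...' into a dict, converting digits to int."""
    settings = {}
    for token in line.split():
        key, _, value = token.partition(":")
        settings[key] = int(value) if value.isdigit() else value
    return settings


settings = parse_settings(
    "width:768 height:448 steps:50 cfg_scale:10 sampler:k_euler_a"
)
print(settings["width"] * settings["height"])  # pixels per image: 344064
```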


RobotSquirrel

Arcane

The hype behind AI is a psyop to acclimate the public to the idea of impersonal intelligence so that the """""""elite""""""" can do what they want and shift the blame for the consequences on AI's purportedly impartial, optimized calculations when things get ugly.

Just saying we were warned about that lol. I think more than anything though it's an attempt to uncouple labor from the elite's social contract.

I'm actually not concerned as an artist because I enjoy the process of making things. It's irrelevant that humans are being replaced they were always going to be.


Look out Unreal Engine 5, AI is coming for you.

I was just thinking about the technology that is becoming obsolete: game engines, 3D editors, 2D editors, IDEs, programming languages. Billions of dollars of R&D up in smoke. I also wonder whether it will make some or all math obsolete. AI doesn't need equations to get things done, since it works similarly to our brains.

For the person who thinks I'm way out: I think algorithmic engines and related tools will become obsolete IF AI rendering speed reaches a high frame rate. John Carmack just started a company to develop AGI, so massive performance increases in these programs could become available in the future. I have also seen AI make 3D animations.

Why do I say no math? Because while these AIs are made using math algorithms, they do not generate these pictures using math. Math and algorithms are used to make non-thinking machines perform tasks. Photoshop, 3D Studio Max and Unreal use math and algorithms to make pictures. But an artist making an oil painting does not use math. He just does it. AI has no need for math and algorithms to perform a task, since it is modeled on the human brain. Like us, after training on a skill, it just does it. For example, it's not doing calculations using triangles, meshes, matrix transformations and light equations, like a game engine does when it draws a picture. It just does it, like a human painter would.


Dexter

Arcane

- Joined

- Mar 31, 2011

- Messages

- 15,657

I think you're way off on the time frame. Maybe like two decades from now something like what you're talking about might exist, but so far I can only render 1 FPS at 512x512 on a 3080 Ti with 12GB VRAM under the best of circumstances.

Open Sourcing this instead of trying to lock it up on some proprietary website and filtering prompts like DALL-E and Midjourney or whatever they're all called was definitely a big move though. Lots of people are playing around with it, doing Optimizations and iterating and making Plugins for other Tools based on it and whatnot. You can already make short Videos or Animations with something that's called "Deforum" and similar interpolation Tools: https://replicate.com/deforum/deforum_stable_diffusion

What it seems to do is render a frame, then shift the image, render the next frame and interpolate between them. But so far it lacks consistency between frames and, obviously, any kind of active user input that would allow a player to directly interact with a "game world". Someone else was working on a Blender plugin so you can generate a 2D image from a 3D space:
